In Search of the Grammar of Robot Applications

I’ve been searching for ways to make robot application programming easy[^1]. Here are my insights on the challenges in programming robot applications and my unfulfilled ideas for (dramatically) simplifying the process.

Challenges with using existing robot behavior representations

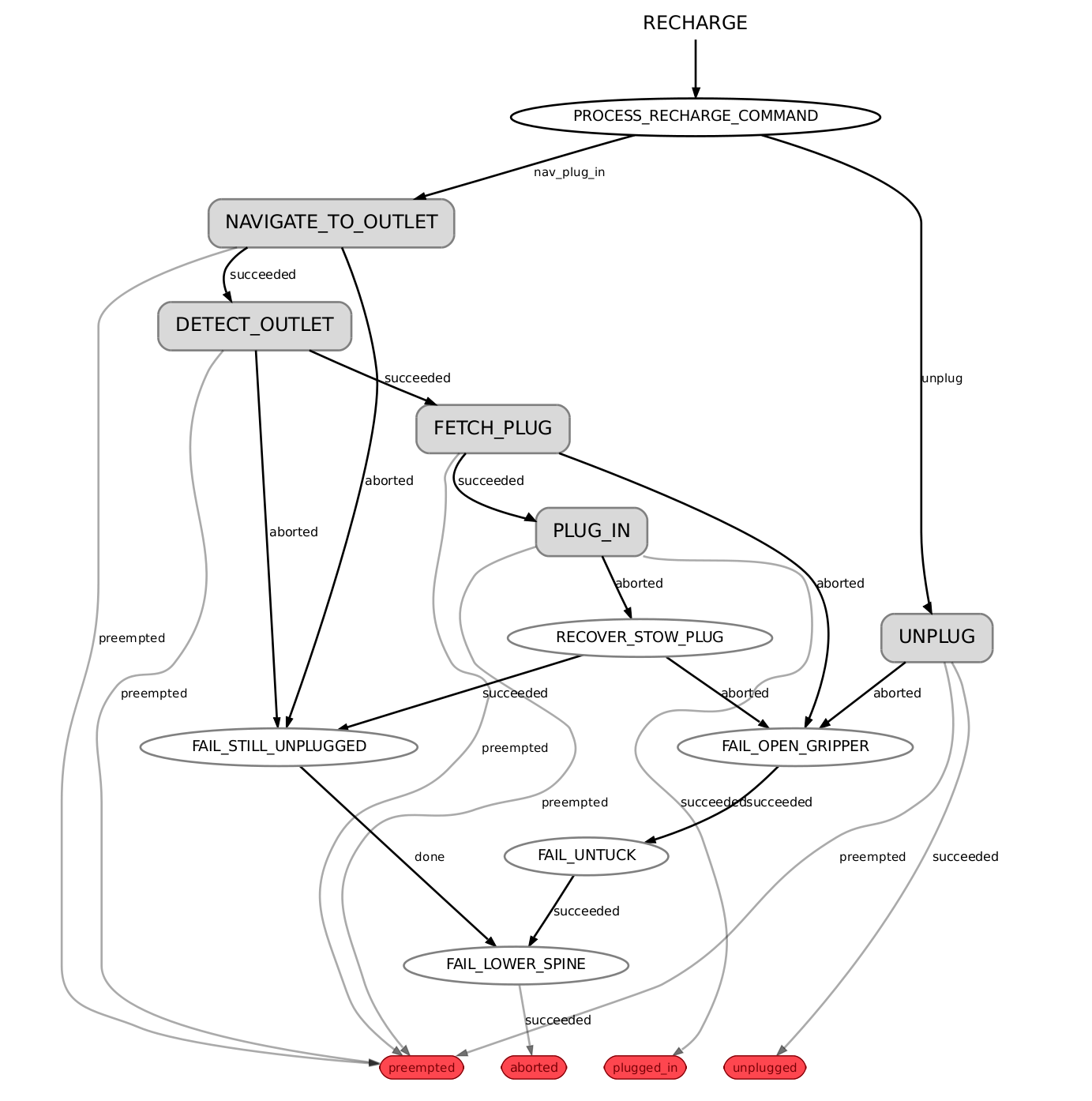

In the past, I viewed a robot application as an application program that executes a (high-level) robot behavior. The two most popular representations for implementing robot behaviors were (and still are) the finite state machine (FSM) and the behavior tree (BT). Thus, programming robot applications usually meant programming FSMs or BTs. In theory, FSMs and BTs are straightforward to program. They have well-defined semantics (e.g., mathematical definitions), which makes them predictable and easy to reason about, and they natively support composition, enabling the creation of complex behaviors by reusing simpler ones.
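To make the composition claim concrete, here is a minimal sketch of a behavior tree in plain Python. It is not taken from any particular BT library; the node names (`detect_object`, `grasp`, `ask_for_help`) and the fixed-status `action` leaf are hypothetical, and the sequence and fallback nodes are the memoryless kind that restart from the first child on every tick.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


# A node is anything that can be ticked and reports a status.
Node = Callable[[], Status]


def sequence(children: List[Node]) -> Node:
    """Tick children in order; stop at the first one that is not SUCCESS."""
    def tick() -> Status:
        for child in children:
            status = child()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS
    return tick


def fallback(children: List[Node]) -> Node:
    """Tick children in order; stop at the first one that is not FAILURE."""
    def tick() -> Status:
        for child in children:
            status = child()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE
    return tick


def action(name: str, result: Status, log: List[str]) -> Node:
    """Hypothetical leaf: records that it ran and returns a fixed status."""
    def tick() -> Status:
        log.append(name)
        return result
    return tick


log: List[str] = []
# A simple skill, reused as a child of a larger behavior.
pick = sequence([
    action("detect_object", Status.SUCCESS, log),
    action("grasp", Status.FAILURE, log),
])
pick_or_ask = fallback([pick, action("ask_for_help", Status.SUCCESS, log)])
result = pick_or_ask()
```

Because `pick` is itself a node, it can be dropped into `fallback` unchanged; that is the kind of reuse the composition property promises.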

Programming robot applications with FSMs or BTs wasn’t so easy in practice. The FSM and BT implementations I’ve met in the wild lacked clear semantics or gradually lost them over time, making them difficult to work with. Frequently, I came across ill-defined transition functions that made it challenging to determine the triggers and conditions for transitions. I often found side-effect-causing code snippets scattered across multiple states, used to force transitions or trigger actions instead of properly extending the transition function. As a result, the FSMs became unpredictable and difficult to test. Regarding BTs, I encountered subtleties specific to the implementation or programming language, e.g., in how the execution logic handles the “tick,” and misuses of BT features, e.g., abusing the blackboard, that invariably led to complications[^2].
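For contrast, here is a small sketch of what a well-defined transition function can look like: every trigger lives in one table, and the step function is pure, so it can be tested without a robot. The states and events (`idle`, `navigating`, `obstacle`, and so on) are made up for illustration.

```python
from typing import Dict, List, Tuple

State = str
Event = str

# The whole transition function in one table: (state, event) -> next state.
TRANSITIONS: Dict[Tuple[State, Event], State] = {
    ("idle", "goal_received"): "navigating",
    ("navigating", "arrived"): "idle",
    ("navigating", "obstacle"): "waiting",
    ("waiting", "path_clear"): "navigating",
}


def step(state: State, event: Event) -> State:
    """Pure transition: unknown (state, event) pairs leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(initial: State, events: List[Event]) -> List[State]:
    """Fold a list of events into the sequence of visited states."""
    states = [initial]
    for event in events:
        states.append(step(states[-1], event))
    return states


trace = run("idle", ["goal_received", "obstacle", "arrived", "path_clear", "arrived"])
```

Forcing a transition from inside a state would bypass `TRANSITIONS`, which is exactly the pattern that made the FSMs described above hard to predict.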

Creating complex behaviors wasn’t as easy as it appeared to be. The most significant challenge I’ve faced was the maintenance of modules. Driven by the composability of FSMs or BTs, developers often created intermediate FSM states or BT nodes (referred to as “modules”) to represent simple skills or patterns. While developers intended these modules to be reusable, without proper planning, it was all too easy to end up with numerous modules that hindered the creation of complex behaviors.

In most robotics (software) companies, the abovementioned challenges weren’t insurmountable. Given the utmost importance of maintaining robust robot applications, companies could readily allocate ample resources to ensure the predictability and composability of FSMs and BTs. This could be achieved through extensive discussions, refactoring, testing, and more.

FSMs and BTs have served the robotics community well and will likely continue to do so. Yet I didn’t think using them was the future. My primary concern was that they tend to nudge developers to view the robot as a solitary, central entity when determining the main flow of the application program. In the RaaS companies I worked at, robot applications almost always involved multiple robots, such as fleets of indoor mobile robots or lines of robot manipulators. Even when the application focused on the behavior of a single robot, the underlying system was a distributed one comprising robotics services (e.g., perception, control) and external services (e.g., user interface, scheduler). Consequently, application developers needed to think in terms of multiple robots or services and their interactions. In such a context, it made more sense to regard a robot application as a program that implements the orchestration logic of a distributed system.

Inspirations from non-robotics communities

When reactive programming was making a buzz in the WebDev community, I stumbled upon Cycle.js and fell in love with it immediately. I loved how Cycle.js’ language-agnostic core concepts, like the functional reactive programming paradigm and the ports and adaptors architecture, seemed transferable to robot application programming. I could imagine that robot applications with complex interruption signals (e.g., a mix of external and internal signals like user input and system errors), or more generally complex interaction flows, could be concisely expressed in a functional reactive programming paradigm. By following the ports and adaptors architecture, Cycle.js enforces a separation of side-effect-making code from the application logic. This made testing a breeze, especially with the test tooling that often came with reactive stream libraries like ReactiveX. It seemed that testing robot applications could benefit from such tooling as well.
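The separation of side effects from application logic can be sketched without any framework. The snippet below is not Cycle.js code and does not use a reactive stream library; it models streams as plain Python iterables, and the `Event`, `Command`, `app`, and `run` names are hypothetical. The application logic maps events to commands and performs no I/O, while a driver passed in from outside carries out the effects.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass(frozen=True)
class Event:
    source: str   # e.g. "user" or "system"
    kind: str     # e.g. "start", "stop", "error"


@dataclass(frozen=True)
class Command:
    target: str   # e.g. "base" or "speech"
    action: str


def app(events: Iterable[Event]) -> Iterator[Command]:
    """Application logic: events in, commands out, no I/O."""
    moving = False
    for event in events:
        if event.kind == "start" and not moving:
            moving = True
            yield Command("base", "move")
        elif event.kind in ("stop", "error") and moving:
            # A user stop and a system error interrupt the motion the same way.
            moving = False
            yield Command("base", "halt")
            if event.kind == "error":
                yield Command("speech", "say: something went wrong")


def run(events: Iterable[Event], driver: Callable[[Command], None]) -> None:
    """Adapter side: the only place where side effects happen."""
    for command in app(events):
        driver(command)


# In a test, the "driver" just records commands instead of moving a robot.
sent: List[Command] = []
run(
    [Event("user", "start"), Event("system", "error"), Event("user", "stop")],
    sent.append,
)
```

Swapping `sent.append` for a function that talks to real hardware would not require touching `app`, which is what makes this style easy to test.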

Charmed by the initial impressions, I experimented with applying the core concepts from Cycle.js directly to robot application programming. There were some initial successes. It delivered on simplifying the authoring and testing of complex interactive programs. However, I eventually faced major challenges. State management in Cycle.js-like frameworks was awkward, especially when it came to composing finite state machines; it required techniques that are hard to get right, like creating circular dependencies between streams. This was a showstopper because creating FSMs must be well supported for the robotics community. Adopting Cycle.js’ core concepts also didn’t help much with the challenges of building robust applications for distributed robotics systems, such as dealing with unresponsive services, rare process crashes, and subtle performance regressions. Given such experiences, I felt a strong need for a higher-level abstraction that allows developers to work directly in the application space without worrying about system-level problems[^3].

Looking for new ideas, I explored tools and techniques from the DevOps community, known for its rich experience working with (large) distributed systems. The community’s proficiency in the declarative approach, i.e., describing the desired state and automating the remaining steps, and its emphasis on robustness and resilience were evident in tools (e.g., Kubernetes) and processes (e.g., GitOps)[^4]. I liked what I found, but it wasn’t immediately apparent how to apply these findings to the domain of robot application programming. At the time (2017-2018), DevOps tools were primarily tailored to specific environments (e.g., the cloud) and technologies (e.g., containers) that were foreign to robotics systems. Nevertheless, because DevOps is a methodology rather than a set of tools, its practices have gradually been making their way into the robotics domain[^5]. I hope to catch up and revisit the adoption in a future post.
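The declarative idea, describing the desired state and letting automation work out the steps, can be illustrated with a toy reconciliation step. This is only a sketch in plain Python and does not use the Kubernetes API; the service names and the `plan` and `apply` helpers are hypothetical, and `apply` merely simulates actions that always succeed.

```python
from typing import Dict, List, Tuple

# Desired and observed state: service name -> number of running instances.
Spec = Dict[str, int]


def plan(desired: Spec, observed: Spec) -> List[Tuple[str, str]]:
    """Compute the actions that move the observed state toward the desired one."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(set(desired) | set(observed)):
        want, have = desired.get(name, 0), observed.get(name, 0)
        actions += [("start", name)] * max(want - have, 0)
        actions += [("stop", name)] * max(have - want, 0)
    return actions


def apply(observed: Spec, actions: List[Tuple[str, str]]) -> Spec:
    """Simulated actuator: returns the state after the actions succeed."""
    state = dict(observed)
    for verb, name in actions:
        state[name] = state.get(name, 0) + (1 if verb == "start" else -1)
    return {name: count for name, count in state.items() if count > 0}


desired = {"perception": 1, "controller": 2}
observed = {"perception": 1, "controller": 1, "debug_ui": 1}
actions = plan(desired, observed)
converged = apply(observed, actions)
```

The developer only edits `desired`; running `plan` again after a crash or a manual change yields whatever actions are needed to get back to it.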

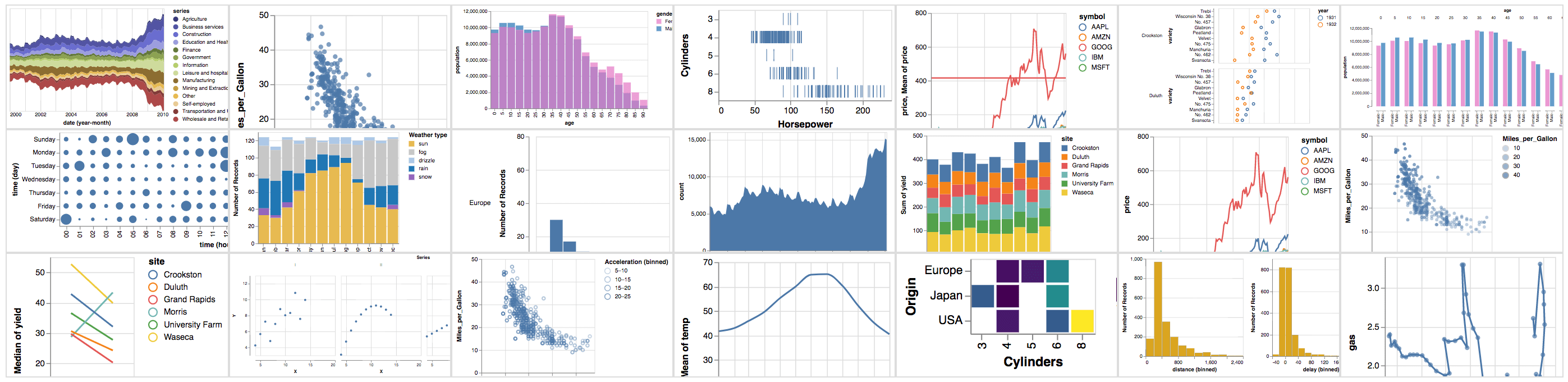

Perhaps the biggest inspiration came from the grammar of graphics[^6]. The grammar of graphics offers a concise and structured way to build and explore a large space of data visualizations quickly. There are three core layers of the grammar of graphics:

- Data is a data frame containing one or more variables. Fundamentally, data represents categorizable inputs to a visualization system.

- Aesthetic defines the mappings of one or more variables to one or more visual elements. Fundamentally, Aesthetic maps the categorizable inputs to entities in the application space.

- Geometry decides the type or shape of the visual elements. Fundamentally, Geometry gives precise meanings to the mapped entities.

We can apply the fundamental structure to the robot application programming domain and build the “grammar of robot applications,” for example:

- Robot layout defines the physical arrangement of robots and other structures, for example, a layout of a manufacturing line and robot cells.

- Task mapping defines how robots and devices map to particular tasks, e.g., insertion, inspection, etc.

- Task detailing defines details of the assigned tasks, possibly even the interaction of multiple robots and devices.
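As a rough illustration of the three layers above, here is a sketch of such a specification as plain Python data plus a validator. Everything in it is hypothetical: the `Layout` and `AppSpec` types, the task vocabulary in `REQUIRED_PARAMS`, and the robot names are invented for this example and do not come from an existing tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Layout:
    # Robot layout: which robots/devices exist and which cell each sits in.
    cells: Dict[str, List[str]]


@dataclass(frozen=True)
class AppSpec:
    layout: Layout
    # Task mapping: robot/device name -> task type.
    task_mapping: Dict[str, str]
    # Task detailing: robot/device name -> parameters of its task.
    task_details: Dict[str, Dict[str, object]] = field(default_factory=dict)


# Hypothetical vocabulary for one application domain (a manufacturing line).
REQUIRED_PARAMS = {"insertion": {"part", "max_force_n"}, "inspection": {"camera"}}


def validate(spec: AppSpec) -> List[str]:
    """Return human-readable errors; an empty list means the spec is well-formed."""
    errors: List[str] = []
    known = {name for members in spec.layout.cells.values() for name in members}
    for name, task in sorted(spec.task_mapping.items()):
        if name not in known:
            errors.append(f"{name}: not in layout")
        if task not in REQUIRED_PARAMS:
            errors.append(f"{name}: unknown task '{task}'")
            continue
        missing = REQUIRED_PARAMS[task] - set(spec.task_details.get(name, {}))
        if missing:
            errors.append(f"{name}: missing {sorted(missing)}")
    return errors


spec = AppSpec(
    layout=Layout(cells={"cell_a": ["arm_1"], "cell_b": ["arm_2"]}),
    task_mapping={"arm_1": "insertion", "arm_2": "inspection", "arm_3": "welding"},
    task_details={"arm_1": {"part": "pin", "max_force_n": 20}},
)
errors = validate(spec)
```

The validator is where the grammar earns its keep: a spec that names a robot outside the layout or a task outside the vocabulary is rejected before anything runs.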

It’s a rudimentary idea; I don’t know exactly how this grammar would address distributed robotics systems problems like unresponsive services, rare process crashes, and subtle performance regressions. But one could imagine defining such a grammar or declarative specification that can express the space of particular robot applications, e.g., the space of manufacturing line applications or the space of indoor delivery applications. Then, the task of developers or solution engineers would be to write a configuration that precisely describes the particular application they have in mind. Constructing such a grammar will be nontrivial. It will require a deep understanding and careful organization of each application domain, which in turn calls for collaboration with that domain’s experts. However, I believe this is the direction that can accelerate the adoption of intelligent robotics services in the target industry.

(Update 2023/04/01) There’s been a lot of hype about how ChatGPT, or Large Language Model (LLM)-driven program synthesis more generally, will take away programming jobs. While I don’t fully agree with such hype[^7], I do think ChatGPT will change programming significantly[^8]. What’s interesting to me is that LLM-driven program synthesis (LLMSynth) and a grammar-based specification (GSpec) share a goal: to reduce the huge search space of programming and hence enable developers to describe intended programs concisely. However, the two take polar opposite approaches; LLMSynth takes the expensive machine learning approach (e.g., requiring lots of data and computing power), and GSpec takes the laborious design approach (e.g., requiring careful design efforts from humans). As a result, LLMSynth is highly capable (i.e., it can do many programming tasks) but not always precise (i.e., it sometimes returns incorrect programs), while GSpec is less expressive (i.e., it can’t do tasks it wasn’t designed for) but precise (i.e., it always returns correct programs). Although I do like to use ChatGPT/Bard/Copilot, I think using a grammar is better for building robot applications because the space of possible programs can be scoped[^9], and the cost of running incorrect programs is extremely high.

What’s also interesting to me is the rise of No-Code[^10] in the last ~5 years. Like LLMSynth and GSpec, No-Code shares the goal of simplifying programming, and its approach is closer to GSpec’s than to LLMSynth’s, e.g., No-Code tools or visual programming interfaces are designed and built manually (and likely do not involve machine learning). The main difference is the level of expressivity. Because of their focus on visual programming (targeting non-programmer users), No-Code tools tend to expose a subset of programming interfaces or high-level abstractions through a visual programming interface, resulting in a smaller output program space than that of GSpec. It’s possible for a No-Code tool to expose a high-level grammar, but I haven’t seen tools like that except Tableau. By the way, No-Code tools have other issues beyond the limited expressivity, e.g., the (different) challenge of supporting non-programmer users and keeping visual programs maintainable.

Closing notes

I wrote this post to share an idea I’m excited about, the grammar of robot applications, in its very early form, and to encourage readers to consider a grammar/DSL/declarative specification approach when building their next robot programming tool. But this post wasn’t just about that. I also shared my observations on the challenges of robot application programming and the things I’ve tried, e.g., applying tools and techniques from other communities, in the hope of inspiring others to consider cross-over approaches where it makes sense.

Let me know what you think by leaving comments below or messaging me on LinkedIn or Twitter!

- Do my experiences/approaches resonate/align (or not resonate/align) with yours?

- Do you have any robotics (application) problems that would benefit from introducing a DSL?

I’d love to hear your thoughts.

Footnotes

[^1] I wrote a thesis on this topic; this post shares my explorations regarding developer tools that didn’t make it into my thesis.

[^2] For more insights, see Overcoming Pitfalls in Behavior Tree Design.

[^3] Maybe something like Wasp and Temporal combined.

[^4] For gentle introductions, see What is Kubernetes | Kubernetes explained in 15 mins and What is GitOps, How GitOps works and Why it’s so useful

[^5] awesome-cloud-robotics provides some ideas.

[^6] For a gentle introduction, see Introduction to the Grammar of Graphics

[^7] Check out Large language models will change programming… a little by Amy Ko.

[^8] Check out Large language models will change programming … a lot by Amy Ko.

[^9] Interestingly, some use LLMSynth together with a DSL (rather than a grammar), and it seems to work?

[^10] For examples, check NOCODE.TECH and NoCodeList.